Ranking Methodology: criteria (cost, reach, criticality, persistence)

To rank the “biggest cyberattacks in history,” I applied four criteria that, combined, give a truer picture than a single damage figure:

| Criterion | Definition | Indicators | Weight |

|---|---|---|---|

| Cost | Direct and indirect impact | € lost, fines, litigation | 35% |

| Reach | Countries/sectors affected | # organizations, countries | 25% |

| Criticality | Infrastructure and outages | Time out of service | 25% |

| Persistence | Stealth and complexity | Days undetected, 0-days | 15% |

2010–2025 Timeline: from industrial sabotage to a global wiper

2007–2008: DDoS attacks on Estonia and Georgia open the door to “digital support” in conflicts.

2010: Stuxnet proves malware can damage industrial equipment (ICS/OT).

2013–2014: Yahoo breaches (billions of accounts) escalate concern over PII.

2014: Sony Pictures brings a highly public political dimension.

2015–2016: Power cuts in Ukraine reveal operations against critical infrastructure.

2017: The black swan year. WannaCry (global ransomware) and NotPetya (supply-chain wiper) reset the rules.

2019–2020: Large-scale supply chain compromises (SolarWinds) with a focus on espionage.

2021–2025: Cartelized ransomware, double extortion, attacks on SaaS/IT providers, and campaigns mixing crime and geopolitics.

For me, 2017 was the turning point: NotPetya made it painfully clear we’re not as resilient as we think, and that a single critical dependency (accounting/tax, logistics, IT) can freeze half a country.

Top 10 Historic Cyberattacks (with takeaways)

1) NotPetya (2017) — the wiper that froze Ukraine and rippled worldwide

What happened. Attackers compromised the update mechanism of M.E.Doc (widely used Ukrainian tax software). The payload unpacked as a worm: credential dumping with Mimikatz, lateral movement via EternalBlue/EternalRomance, then MBR tampering and disk encryption patterns that made recovery infeasible. The ransom note was camouflage—no working decryptor existed.

Why it mattered. It turned a niche supplier into a single point of failure for a country, then leapt globally through multinational networks. It showed how “IT housekeeping” issues (flat networks, legacy SMB, over-privileged service accounts) can become national-scale outages.

TTPs to know. Supply-chain compromise; signed or trusted updates; LSASS access; SMB/RPC lateral movement; scheduled tasks and PsExec; destructive MBR/boot changes.

Detection clues. Unusual outbound traffic after updates; spikes in SMB sessions; LSASS handle access; sudden creation of scheduled tasks across many hosts; fake “disk repair” messages before reboot.

What would have changed the outcome.

Strict network segmentation and deny-by-default for SMB between segments.

LSA Protection/LAPS, tiered admin and PAWs to contain creds.

Immutable backups + frequent restore drills; golden-image rebuilds.

Supplier due diligence: signed updates, SBOM, build integrity evidence.

Personal note: identifying the

perfckill switch (read-only file) proved how a tiny technical detail can buy time when everything else is on fire.2) WannaCry (2017) — planet-scale ransomware at worm speed

What happened. Automated exploitation of SMBv1 (EternalBlue) delivered ransomware that self-propagated with minimal human interaction. Critical services (including healthcare) were hit within hours.

Why it mattered. It was the clearest demonstration that patch latency on internet-reachable or internally widespread protocols translates directly into business downtime.

TTPs to know. SMBv1 remote code execution; basic persistence; rapid encryption of common extensions; crude but effective worming logic.

Detection clues. Surges in port 445 traffic; anomalous scanning from a single host to many; sudden spikes in file rename/write operations.

What would have changed the outcome.

Retiring SMBv1 and hardening SMB.

Accelerated patching for edge-exposed services; maintenance windows sized to risk.

EDR rules for worm-like behavior; isolation playbooks to quarantine first, ask later.

3) Stuxnet (2010) — the first industrial cyber-weapon

What happened. Multi-stage malware leveraged several 0-days and stolen certificates to infiltrate air-gapped environments via USB, then targeted PLC logic to subtly alter physical processes while spoofing operator screens.

Why it mattered. It proved malware can cause physical degradation without obvious alarms, and that engineering workstations and ladder logic are part of the threat surface.

TTPs to know. LNK and Print Spooler 0-days (historically), code-signing abuse, PLC payloads, rootkits for ICS.

Detection clues. Unexpected ladder logic changes; mismatches between telemetry and HMI displays; unsigned/odd drivers on engineering hosts.

What would have changed the outcome.

IT/OT segregation, jump-server patterns, unidirectional gateways.

Change control specific to PLC projects; out-of-band validation of sensor data.

Application allow-listing and driver signing enforcement on engineering stations.

4) SolarWinds (2020) — supply-chain espionage

What happened. A trusted enterprise IT platform shipped trojanized updates, granting covert access to thousands of networks. Post-compromise, operators used living-off-the-land techniques to remain stealthy.

Why it mattered. It showed that a single vendor build pipeline can become a force multiplier for long-term, low-noise espionage across public and private sectors.

TTPs to know. Build system tampering; signed malicious DLLs; SAML token abuse; selective C2 with very low noise.

Detection clues. Rare parent-child process chains on the platform’s services; unusual Azure AD/IdP token flows; beaconing with long sleep intervals.

What would have changed the outcome.

Build integrity (isolated signers, reproducible builds, attestations).

SBOM distribution; update telemetry and anomaly scoring.

Zero Trust on east-west traffic and identity; continuous verification of IdP assumptions.

5) Yahoo (2013–2014) — the largest account breach

What happened. Attackers harvested account data at unprecedented scale by chaining app weaknesses, credential weaknesses, and token/session handling issues.

Why it mattered. It reset expectations about the scope and longevity of PII breaches and their valuation impact years later.

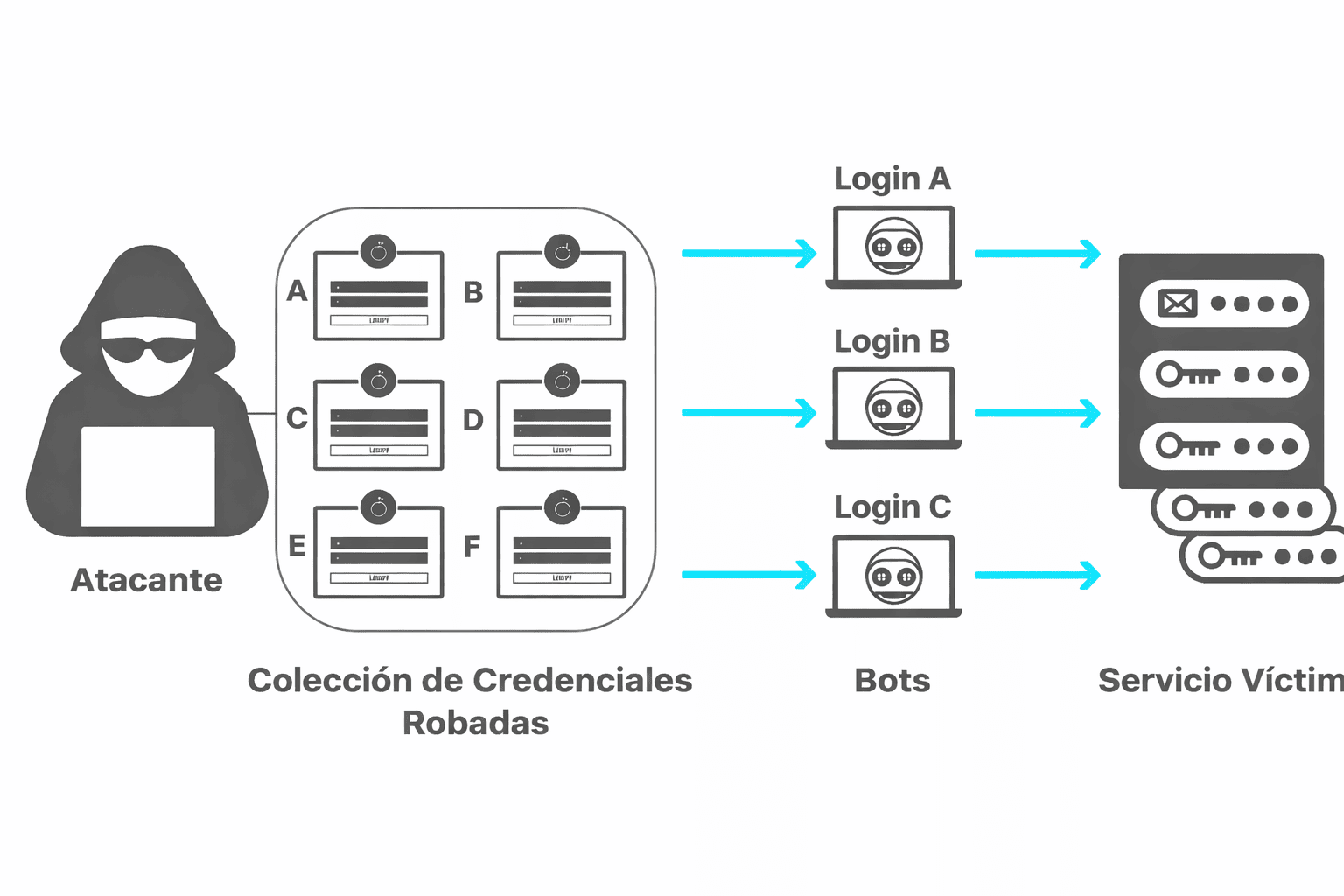

TTPs to know. Session fixation/forgery patterns; weak hashing for legacy datasets; credential stuffing follow-ons.

Detection clues. Anomalous authentication patterns from shared ASNs; token reuse outside expected lifetimes; high-volume profile reads.

What would have changed the outcome.

Argon2/bcrypt with strong parameters; rotate legacy hashes.

Risk-based authentication and anomaly detection.

Session lifecycle hygiene and key rotation by default.

6) Equifax (2017) — known vulnerability, massive impact

What happened. An internet-facing application with a known critical vulnerability remained unpatched; attackers exfiltrated sensitive PII through the app layer.

Why it mattered. It showcased how asset discovery gaps and weak patch governance can eclipse any number of downstream controls.

TTPs to know. Web RCE, web shells, data staging and exfil over HTTPS.

Detection clues. Odd user-agent strings; long-lived HTTPS sessions to little-known hosts; spikes in DB reads off business hours.

What would have changed the outcome.

Continuous asset inventory; SLA-driven patching by CVSS + exploitability.

Runtime protection (WAF/RASP) tuned to the stack.

Tabletop exercises focused on PII breach response.

7) Sony Pictures (2014) — leaks and destructive sabotage

What happened. Social engineering and footholds led to domain-wide expansion, data theft, and destructive wiping of many endpoints/servers, plus staged leaks to maximize reputational harm.

Why it mattered. It mixed destruction with information operations, forcing organizations to plan for technical + PR + legal crises in parallel.

TTPs to know. Phishing, credential reuse, domain escalation, data staging, wiper deployment.

Detection clues. Sudden large SMB copies; archival utilities running on non-backup hosts; mass creation of scheduled tasks; spikes in endpoint reimages.

What would have changed the outcome.

Tiered admin and PAWs; DLP with meaningful policies.

Data segmentation and need-to-know access.

A communications crisis plan rehearsed with legal and PR.

8) Estonia (2007) — country-scale DDoS

What happened. A wave of coordinated DDoS knocked out government portals, banks, and media sites, overwhelming upstream capacity and local infra.

Why it mattered. It was an early lesson in national digital resilience and the need for pre-arranged DDoS partnerships.

TTPs to know. Botnet-driven volumetric floods; application-layer requests at scale; reflector/amplifier abuse.

Detection clues. Sudden surges from diverse global IPs; SYN floods; spikes in 502/503s; upstream congestion alerts.

What would have changed the outcome.

Contracts with scrubbing centers; anycast and geo load-balancing.

Rate-limiting and caching strategies; crisis comms channels outside the primary domain.

9) Georgia (2008) — hybrid-warfare prelude

What happened. DDoS and defacements aligned with kinetic operations to degrade information flows and public confidence.

Why it mattered. It established cyber as a standard theatre in geopolitical crises, pressuring response coordination across civil, military, and private operators.

TTPs to know. Website defacement chains; DDoS; opportunistic compromises of media/government CMS.

Detection clues. Admin logins from atypical geos; spikes in web POSTs; DNS tampering attempts.

What would have changed the outcome.

Inter-agency exercises; pre-approved fallback sites and broadcast channels.

Managed DNS with locked registrar settings; WAF/CDN failover plans.

10) Cadena SER (2019) — local but consequential newsroom outage

What happened. Ransomware disrupted editorial systems, forcing manual workflows and impacting broadcasting schedules.

Why it mattered. A reminder that media uptime is public-interest infrastructure and that newsroom IT often mixes legacy stacks with modern SaaS.

TTPs to know. Phishing footholds; lateral movement to file servers; rapid encryption of shared volumes.

Detection clues. Burst of file renames; spikes in CPU/disk on NAS; EDR flags for mass encryption patterns.

What would have changed the outcome.

Hardened endpoint baselines and privilege hygiene.

Segregated broadcast-critical segments with restrictive ACLs.

Practiced manual continuity and image-based rapid restore for studios.

How Major Attacks Spread: worms, exploits, and lateral movement

Large-scale impact rarely comes from a single phish. It’s the automation + reach that turns a foothold into a crisis.

Worms: automate discovery and exploitation (e.g., WannaCry/NotPetya). Once inside, they scan, replicate, and trigger encryption or wiping with minimal human input.

Credentials: tools like Mimikatz harvest credentials from memory (LSASS) and cached secrets. Without LSA Protection, tiered admin, and LAPS, one endpoint can unlock the whole estate.

Network exploits: SMB/RDP/VPN bugs accelerate spread across flat networks. Old protocols (SMBv1) and weak segmentation are force multipliers.

ATT&CK tactics: Lateral Movement (Pass-the-Hash/Ticket, PsExec, WMI), Privilege Escalation, Defense Evasion (tamper AV/EDR), and stealthy Command & Control (long sleep intervals, domain fronting).

From experience, the bottleneck isn’t “detection exists?” but reaction speed. If your EDR shouts while the network still allows free east-west movement, the attacker wins on velocity.

| Technique | How it works | Signals | Defense |

|---|---|---|---|

| Phishing / Social engineering | Delivers payload or harvests creds via email/SMS | Unusual clicks, obfuscated attachments | Training, sandboxing, DMARC/DKIM/SPF |

| Supply-chain compromise | Trojanized updates or tampered dependencies | Odd traffic post-update, hash/signature mismatches | Signed updates, SBOM, build-integrity attestations |

| Exploiting remote services | SMB/RDP/VPN flaws; internet-exposed edge | Port 445/3389 spikes; mass auth attempts | Patching, disable SMBv1, MFA, segmentation |

| Credential dumping | Reads LSASS; extracts hashes/tickets | LSASS access, known IOCs | LSA Protection, EDR/XDR, LAPS, tiered admin |

| Lateral movement | PsExec/WMI; Pass-the-Hash/Ticket | Admin remote tools on unusual hosts | Micro-segmentation, MFA, block unneeded protocols |

| Persistence | Tasks/services/registry run at boot | New autoruns without change tickets | Change control, application allow-listing |

| Exfiltration | HTTP(S) or DNS tunneling | Traffic to rare domains; volume anomalies | DLP, proxy inspection with governance |

| Wiper / MBR overwrite | Irreversible data/boot corruption | Fake “disk repair” banner; mass reboots | Immutable backups; immediate isolation |

| Stealthy C2 | Long sleeps; blends with legit traffic | Periodic beacons; odd JA3/UA fingerprints | Threat intel, reputation blocking, EDR |

| Living off the Land | Native tools (PowerShell, WMI) abused | Signed scripts in unusual contexts | ConstrainedLanguage, logging, allow-listing |

Supply-chain attacks: the M.E.Doc lesson and why SMEs are targets

Uncomfortable truth: your security equals that of your weakest supplier. In my case, watching a mandatory tax app turn into a beachhead was a wake-up call. SMEs often think “Why would anyone target us?” The answer: you’re the bridge to larger prey.

What to demand from suppliers (and write into contracts):

Evidence of build security (isolated signers, protected pipelines, reproducible builds).

SBOM and vulnerability advisories; patching SLAs by criticality.

Audit rights, security questionnaires with proof, and a Plan B if the update channel is compromised.

Incident notification SLAs with secure channels and contact trees.

Actionable lessons: what we wish we’d had before the disaster

Segmentation & micro-segmentation (default-deny SMB between segments).

Identity security: MFA everywhere, LAPS, tiered admin, PAWs for privileged work.

Accelerated patching for internet-exposed services and lateral-movement vectors.

Immutable backups + quarterly restore drills with real RTO/RPO.

EDR/XDR tuned for credential dumping and lateral movement.

Application allow-listing on critical servers and sensitive endpoints.

Centralized telemetry (SIEM) and response playbooks rehearsed.

SecDevOps and supply-chain hardening (signatures, SBOM, attestations).

Zero Trust: verify explicitly; assume breach; limit blast radius.

Culture & training: tabletop exercises, clear roles, decision rights.

From experience: segmenting and practicing restores isn’t glamorous, but it saves businesses.

Tables of interest

| Year | Case | Category | Impact |

|---|---|---|---|

| 2007 | Estonia | DDoS | Disruption of government/financial services |

| 2008 | Georgia | Hybrid warfare | DDoS/defacement during hostilities |

| 2010 | Stuxnet | ICS/OT | Physical damage to industrial equipment |

| 2013–2014 | Yahoo | Data breach | User accounts compromised at massive scale |

| 2014 | Sony Pictures | Wiper + leaks | Large-scale leaks and device wiping |

| 2015–2016 | Ukraine power outages | Critical infrastructure | Interruptions to electricity supply |

| 2017 | WannaCry | Ransomware | Worldwide spread via SMBv1 |

| 2017 | NotPetya | Wiper | Global shutdowns and domino effects |

| 2020 | SolarWinds | Supply chain | Persistent access across many organizations |

| 2021–2025 | Trends | Cartelized ransomware | Double extortion; attacks on SaaS/IT providers |

| Rank | Case (year) | Vector | Reach | Type | Lesson |

|---|---|---|---|---|---|

| 1 | NotPetya (2017) | Supply chain (M.E.Doc) | Global, multi-sector | Wiper | Segmentation + immutable backups |

| 2 | WannaCry (2017) | SMBv1 exploit (EternalBlue) | Global | Ransomware | Accelerate critical patching |

| 3 | Stuxnet (2010) | 0-days / USB / ICS | OT/SCADA | Industrial sabotage | Segregate IT/OT; monitor engineering |

| 4 | SolarWinds (2020) | Compromised update | Many orgs, public/private | Espionage | Signatures, SBOM, build integrity |

| 5 | Yahoo (2013–2014) | Credentials/API | Billions of accounts | Data breach | Strong hashing & anomaly detection |

| 6 | Equifax (2017) | Unpatched web vuln (Struts) | Consumer PII | Data breach | Vuln mgmt & tuned WAF |

| 7 | Sony Pictures (2014) | Phishing / destructive actions | Media & entertainment | Wiper + leaks | DLP, segregation, crisis comms |

| 8 | Estonia (2007) | Coordinated DDoS | Country services | DDoS | Scrubbing, anycast, redundancy |

| 9 | Georgia (2008) | DDoS / defacement | Government & media | Hybrid warfare | Inter-agency crisis readiness |

| 10 | Cadena SER (2019) | Ransomware | Spanish media | Ransomware | Business continuity for newsrooms |

| Technique | How it works | Signals | Defense |

|---|---|---|---|

| Phishing / Social engineering | Delivers payload or harvests creds via email/SMS | Unusual clicks, obfuscated attachments | Training, sandboxing, DMARC/DKIM/SPF |

| Supply-chain compromise | Trojanized updates or tampered dependencies | Odd traffic post-update, hash/signature mismatches | Signed updates, SBOM, build-integrity attestations |

| Exploiting remote services | SMB/RDP/VPN flaws; internet-exposed edge | Port 445/3389 spikes; mass auth attempts | Patching, disable SMBv1, MFA, segmentation |

| Credential dumping | Reads LSASS; extracts hashes/tickets | LSASS access, known IOCs | LSA Protection, EDR/XDR, LAPS, tiered admin |

| Lateral movement | PsExec/WMI; Pass-the-Hash/Ticket | Admin remote tools on unusual hosts | Micro-segmentation, MFA, block unneeded protocols |

| Persistence | Tasks/services/registry run at boot | New autoruns without change tickets | Change control, application allow-listing |

| Exfiltration | HTTP(S) or DNS tunneling | Traffic to rare domains; volume anomalies | DLP, proxy inspection with governance |

| Wiper / MBR overwrite | Irreversible data/boot corruption | Fake “disk repair” banner; mass reboots | Immutable backups; immediate isolation |

| Stealthy C2 | Long sleeps; blends with legit traffic | Periodic beacons; odd JA3/UA fingerprints | Threat intel, reputation blocking, EDR |

| Living off the Land | Native tools (PowerShell, WMI) abused | Signed scripts in unusual contexts | ConstrainedLanguage, logging, allow-listing |

| Control | What to require | Evidence | Frequency |

|---|---|---|---|

| Build integrity | Signed releases; isolated build/signing | Hash + build report/attestation | Per release |

| SBOM | Component list & versions | SPDX/CycloneDX SBOM | Quarterly |

| Vulnerability management | SLAs by criticality; EMER fixes | Patch compliance report | Monthly |

| Access & privileges | Least privilege; SSO/MFA | Access matrix & reviews | Semiannual |

| Logging & telemetry | Update/build logs; secure retention | Signed logs; retention policy | Continuous |

| Incident notification | Time-bound alerts; secure channel | Contractual clause + runbook | On incident |

| Pentest / audit | Internal & external testing | Report + remediation proof | Annual |

| Dependency hygiene | SCA; CVE alerting | SCA report | Per build |

FAQs: Biggest Cyberattacks in History

Which was the most expensive cyberattack in history?

It depends on methodology (direct vs. indirect costs). NotPetya and WannaCry lead for systemic operational damage; Yahoo and Equifax stand out for the scale of data exposed and long-tail penalties.

What’s the difference between ransomware and a wiper?

Ransomware claims decryption after payment (not always true). A wiper is built to irreversibly destroy data or boot records, so there is no working key (e.g., NotPetya).

What is a software supply-chain attack? Examples

Adversaries compromise a vendor’s software or services to reach all its customers. Examples include M.E.Doc/NotPetya (trojanized updates) and SolarWinds (tampered build pipeline).

How can you “vaccinate” during a NotPetya-style outbreak?

Every case is different, but the playbook is: isolate segments, block SMB/RDP, deploy known IOCs, and—if a documented kill switch exists (e.g., read-only perfc for NotPetya)—apply it carefully. Priority one remains recovery readiness and forensics.

Conclusion

The “biggest cyberattacks” aren’t just headlines—they’re operating manuals. The common thread is simple: supply-chain exposure + lateral movement + slow patching + weak recovery practice. If you only start with two things today, make them segmentation and restore drills. Everything else builds on that foundation.